What’s Changed in GPT-5 to Reduce Hallucinations

AI “hallucinations” (when a model confidently generates false or misleading information) remain one of the biggest challenges in artificial intelligence. With GPT-4, hallucinations often caused problems in research, law, and other business-critical use cases. GPT-5 is reported to hallucinate significantly less than GPT-4, raising hopes for a more reliable AI system in 2025. But how much of that improvement is real, and what does it mean for everyday users and for industries that depend on factual accuracy?

What Is an AI Hallucination?

An AI hallucination is when the model:

- Invents facts that don’t exist

- Misrepresents real data

- Cites nonexistent sources

Example from GPT-4: Asked about a “2025 Supreme Court ruling,” it fabricated a case that never happened.

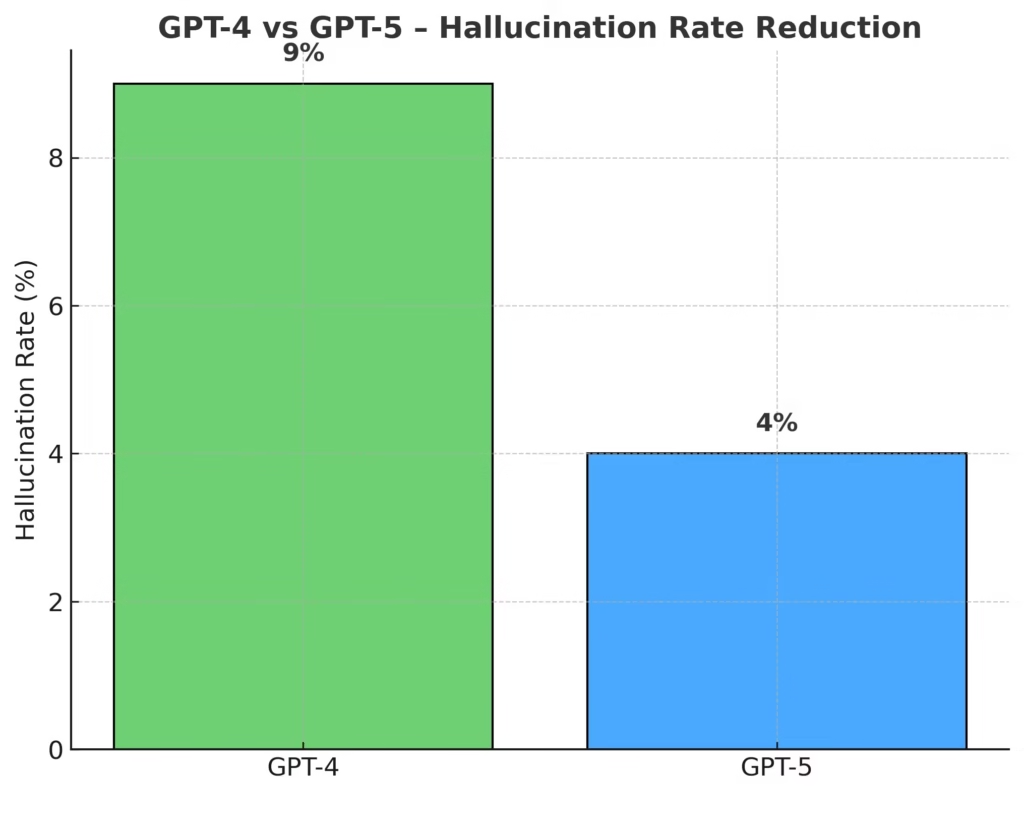

GPT-5 vs GPT-4 – Hallucination Rate Benchmarks

| Metric | GPT-4 (2023) | GPT-5 (2025) | Improvement |
|---|---|---|---|
| Hallucination rate | 8–10% | 3–5% | ~50% fewer false outputs |
| Accuracy rate | 86% | 94% | +8 percentage points |
| Fact-checking ability | Limited | Enhanced | Cross-references trusted data sources |

You can see detailed numbers in our GPT-5 Benchmark Results: Speed, Accuracy & Hallucination Rates.
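The "Improvement" column mixes relative and absolute changes, so it helps to spell out the arithmetic. The sketch below uses only the figures reported in the table (taken at face value, not independently verified). It shows that going from 8–10% to 3–5% is a relative reduction of somewhere between 37.5% and 70% (about 56% at the midpoints), and that 86% to 94% accuracy is an 8-percentage-point gain, or roughly 9.3% in relative terms. The function and variable names are illustrative only.

```python
def relative_reduction(old_rate: float, new_rate: float) -> float:
    """Fraction by which an error rate fell, relative to the old rate."""
    return (old_rate - new_rate) / old_rate

# Reported hallucination-rate ranges from the table, as fractions.
gpt4_range = (0.08, 0.10)
gpt5_range = (0.03, 0.05)

# Smallest plausible reduction: GPT-4 at its lowest rate, GPT-5 at its highest.
low = relative_reduction(gpt4_range[0], gpt5_range[1])
# Largest plausible reduction: GPT-4 at its highest rate, GPT-5 at its lowest.
high = relative_reduction(gpt4_range[1], gpt5_range[0])
# Reduction between the midpoints of the two ranges.
mid = relative_reduction(sum(gpt4_range) / 2, sum(gpt5_range) / 2)

# Accuracy: absolute (percentage-point) change vs relative change.
acc_old, acc_new = 0.86, 0.94
pp_change = (acc_new - acc_old) * 100
rel_change = (acc_new - acc_old) / acc_old

print(f"Hallucination reduction: {low:.1%} to {high:.1%} (midpoint {mid:.1%})")
print(f"Accuracy: +{pp_change:.0f} percentage points ({rel_change:.1%} relative)")
```

Reporting a range rather than a single number makes it clear how much the headline "~50%" depends on which end of each reported range you pick.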

How GPT-5 Reduces Hallucinations

1. Expanded Training Data

GPT-5’s training data includes more recent and diverse sources, reducing reliance on outdated or incomplete information (see GPT-5 Features Explained).

Learn more: GPT-5 capabilities vs GPT-4

2. Improved Reasoning Engine

GPT-5’s improved reasoning engine is designed to check claims before producing output. See our GPT-5 Capabilities vs GPT-4 for how this upgrade works.

3. Integrated Fact-Checking

GPT-5 reportedly uses verification layers that cross-check its output against authoritative data sets during generation.

[Chart: GPT-4 vs GPT-5 hallucination rate reduction]

Why This Matters for Real-World Use

- Research & Education – More reliable citations and references.

- Healthcare – Lower risk of incorrect medical summaries.

- Legal & Compliance – Fewer fabricated laws or court cases.

- Journalism – Stronger trust in AI-assisted reporting.

Limitations – Hallucinations Aren’t Gone

Despite improvements, GPT-5 can still hallucinate, especially when:

- Asked about events after its last training cutoff

- Pushed to speculate or fill knowledge gaps

- Given highly niche or ambiguous prompts

Best Practice: Always verify AI-generated content for critical use cases. You can also explore GPT-5 energy efficiency vs GPT-4 to understand trade-offs beyond accuracy.

Should You Switch to GPT-5 for Reduced Hallucinations?

Upgrade if you:

- Require high factual accuracy

- Work in compliance-sensitive industries

- Use AI for research-heavy projects

Stay with GPT-4 if you:

- Primarily generate creative content where hallucinations have minimal impact

For a full breakdown of performance differences, check our GPT-5 vs GPT-4 performance guide.

Conclusion

GPT-5 shows measurable progress in reducing hallucinations, thanks to improved reasoning, more recent training data, and integrated fact-checking. It is a meaningful upgrade over GPT-4 in reliability. Still, hallucinations aren’t gone, so careful verification remains essential. For high-stakes tasks, GPT-5 is the safer, more refined choice, and our GPT-5 vs GPT-4 Complete Guide breaks down all the differences you should know before upgrading.